Technical SEO: Everything You Need to Know

This post will cover in detail the various elements of technical SEO, providing you with the knowledge and tools to optimize your website for maximum visibility and performance.

Technical SEO is about making your site accessible and understandable to crawlers. It’s the behind-the-scenes work that ensures your website is easily crawlable, indexable, and understandable by search engines like Google.

Without a solid technical foundation, even the most compelling content and robust link-building campaigns can fall flat, because on-page and off-page optimization only pay off once search engines can reach and interpret your pages.

What Is Technical SEO?

Technical SEO refers to the process of optimizing your website’s technical elements to make it easier for search engine crawlers to find, crawl, understand, and index your content.

The elements that contribute to a Technical SEO strategy are:

- Crawlability

- Indexability

- Site Structure

- Breadcrumbs Navigation

- URL Structure

- XML Sitemaps

- Robots.txt

- Meta Robots Tags

- Canonical Tags

- Duplicate Content

- PageSpeed

- HTTPS

- Pagination vs. Infinite Scrolling

- Structured Data

- Broken Links

- Implementing Hreflang

In this post, we will cover how to optimize all of these elements and more.

Why Is Technical SEO Important?

Search engines like Google use automated crawlers to explore and index websites. These crawlers need to be able to easily access and understand your content. Technical SEO ensures that your website is structured in a way that allows them to do just that.

Optimizing Technical SEO leads to:

Improved Crawlability And Indexability

If your content isn’t accessible to search engines, it simply won’t rank. Think of Technical SEO as creating a clear roadmap for search engine crawlers. Properly configured Technical SEO elements guide crawlers through your website, ensuring that all important pages are discovered and indexed.

Enhancing User Experience

Technical SEO has a direct impact on user experience:

- Faster Loading Speeds: Optimizing site speed is a critical aspect of Technical SEO. Users expect websites to load quickly, and slow loading times can lead to frustration and high bounce rates. Faster websites provide a better user experience, encouraging visitors to stay longer and explore more pages.

- Mobile-Friendliness: With the majority of internet users accessing websites on mobile devices, having a mobile-friendly website is essential. Technical SEO ensures that your website is responsive and provides a seamless experience across all devices.

- Secure Browsing: Technical SEO elements like HTTPS provide a safe and secure browsing experience. This builds trust with users. Our guide on Google E-E-A-T And How It Affects SEO explains how these factors contribute to Google’s evaluation of your site.

Boosting Organic Traffic and Rankings

As discussed above, PageSpeed and mobile-friendliness are vital for a good user experience. They are also confirmed ranking signals, so optimizing them can improve your search engine rankings.

This is why Technical SEO plays a vital role in the overall SEO strategy. After all, the goal of SEO is to drive organic traffic to your website.

Search engines prioritize websites that provide a positive user experience and are easy to crawl and index. By optimizing your website’s technical infrastructure, you can improve your search engine rankings and attract more organic traffic.

In a competitive online environment, a website with strong Technical SEO is more likely to outperform competitors in search engine results. This can lead to increased visibility, traffic, and conversions.

Avoiding Costly Mistakes

Optimizing Technical SEO helps avoid costly mistakes:

- Duplicate Content Issues: Technical SEO helps prevent duplicate content issues, which can confuse search engines and harm your rankings.

- Crawl Errors: Technical issues can prevent search engine crawlers from accessing your website, leading to crawl errors and reduced visibility. Regularly monitoring your website for crawl errors is essential.

Crawlability

Crawlability refers to a search engine’s ability to find, access and navigate a site’s content. Search engine bots, or crawlers, systematically explore the web by following links. They need to be able to efficiently discover and analyze your pages to index them in search results.

Here’s why Crawlability is a vital part of Technical SEO:

1. Discovery of Content

Without proper crawlability, search engines won’t be able to find your valuable content. This means those pages won’t be indexed and won’t appear in search results, leading to lost traffic.

2. Efficient Indexing

Crawlability directly impacts how efficiently search engines can index your website. A well-structured website allows crawlers to navigate and index all essential pages.

3. Improved Search Engine Rankings

Search engines prioritize websites that are easy to crawl and index. By optimizing crawlability, you can improve your website’s chances of ranking higher in search results.

4. Budget Optimization

Search engines allocate a “Crawl Budget” to each website. Optimizing crawlability allows search engines to use their crawl budget more efficiently, crawling more of your important pages.

To improve the crawlability of your site, you can focus on optimizing the following elements:

Site Structure and Navigation

Site structure refers to the way your website’s pages are organized and linked together. It’s the hierarchical arrangement of your content, designed to make it easy for users and search engine crawlers to find what they’re looking for.

A logical and well-structured website architecture ensures that all pages are interconnected and easily accessible. Use clear and consistent internal linking to guide crawlers through your website.

Learn about pillar pages and the topic cluster model to understand how to plan for strategic content initiatives. Search engine crawlers need to efficiently navigate and understand your website’s content. Pillar pages and the topic cluster model, with their clear internal linking structure, facilitate this by creating a well-organized and easily crawlable site architecture.

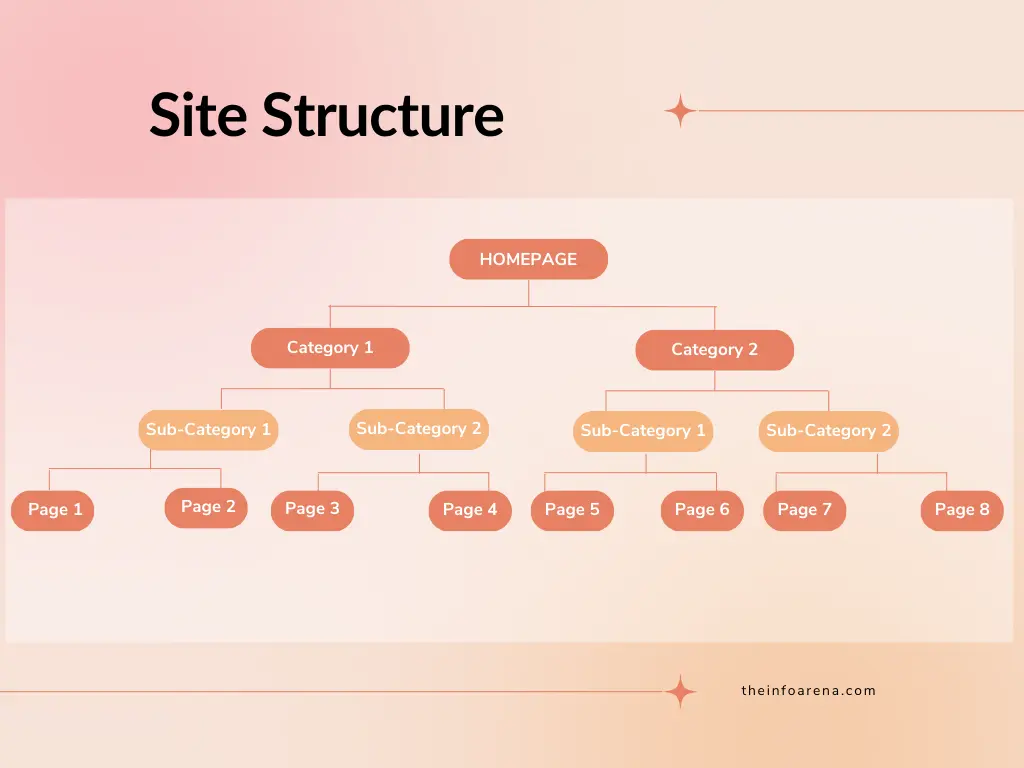

As shown in the above image, it’s vital to have a logical and hierarchical Site Structure in place. Here, the homepage links to the category pages. The category pages are linked to the sub-category pages, which are then linked to the individual pages on the site.

Planning a site structure in this way ensures that all of a site’s pages are within a few clicks from the homepage and from each other. Also, a well-planned site structure ensures that there are no orphan pages.

Orphan pages are pages with no internal links pointing to them, which makes it difficult for search engine crawlers and users to find them.
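Click depth and orphan pages can both be checked with a short script. The sketch below is only an illustration: it assumes you already have an internal-link graph exported from a crawler, and the `links` dictionary, the page paths, and both function names are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage: {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, all_pages, home):
    """Known pages that no page in the graph links to (homepage excluded)."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(p for p in all_pages if p not in linked and p != home)


# Hypothetical internal-link graph: page -> pages it links to.
links = {
    "/": ["/shoes/", "/bags/"],
    "/shoes/": ["/shoes/running/"],
    "/shoes/running/": ["/shoes/running/trail-runner/"],
}
# Hypothetical list of every known page, e.g. taken from the XML sitemap.
all_pages = [
    "/", "/shoes/", "/bags/", "/shoes/running/",
    "/shoes/running/trail-runner/", "/old-landing-page/",
]

depths = click_depths(links, "/")
orphans = orphan_pages(links, all_pages, "/")
print(depths)
print(orphans)
```

Pages with a large depth value, or pages that appear in `orphans`, are candidates for additional internal links.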

Here’s how you can optimize your Site Structure:

- Plan Your Site Architecture:

- Before building your website, plan your site architecture and create a sitemap.

- Use Categories and Subcategories:

- Group related content into categories and subcategories to create a logical hierarchy.

- Implement a Clear Navigation Menu:

- Use a clear and consistent navigation menu that is easy to understand and use.

- Optimize Internal Linking:

- Use relevant anchor text and links to related pages to improve crawlability and distribute link equity.

- Regularly Audit Your Site Structure:

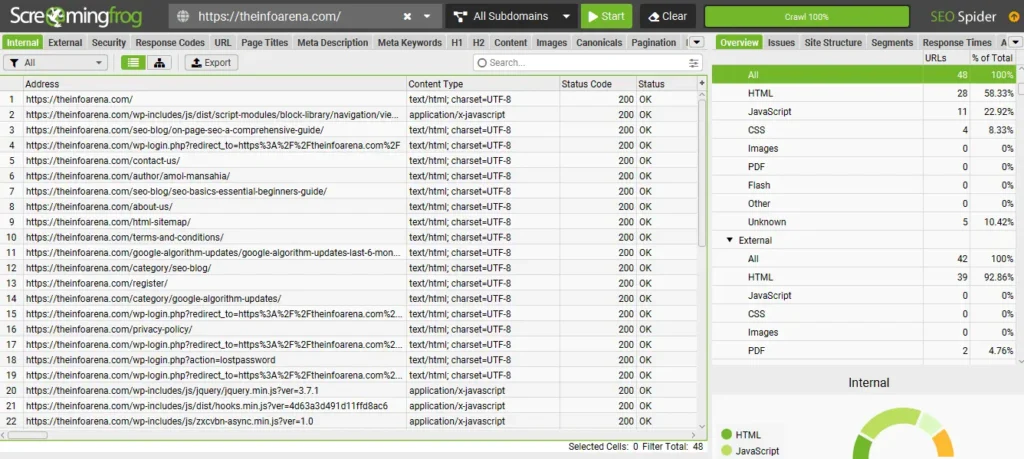

- Use tools like Screaming Frog, Semrush or Google Search Console to identify and fix any issues with your site structure.

Implement Breadcrumbs Navigation

Breadcrumbs Navigation refers to a secondary navigation system that shows the user’s location on a website and indicates the page’s position in the site hierarchy.

Typically displayed horizontally at the top of a page, it helps users understand and explore a site effectively.

A user can navigate all the way up in the site hierarchy, one level at a time, by starting from the last breadcrumb in the breadcrumb trail. We highly recommend that you implement Breadcrumbs Navigation.

The benefits of implementing Breadcrumbs Navigation are:

- Improved User Experience (UX): They provide a quick and easy way to navigate back to previous pages or higher-level categories, reducing frustration and bounce rates.

- Enhanced Crawlability: Breadcrumbs create a clear internal linking structure, making it easier for search engine crawlers to understand the site’s architecture. This improves crawlability and indexability, as crawlers can efficiently follow the breadcrumb trail to discover and index relevant pages.

- SEO Boost: By creating a well-defined internal linking structure, breadcrumbs help distribute link equity throughout the website.

- Reduces Bounce Rate: By providing simple navigation back to higher-level pages, users are less likely to leave a site if they find themselves on a page that does not meet their needs.
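Breadcrumb trails are often also exposed to search engines as schema.org `BreadcrumbList` markup. The following is a minimal sketch of generating that markup, not a required implementation; the function name, page names, and example.com URLs are assumptions made for illustration.

```python
import json


def breadcrumb_jsonld(trail):
    """Build schema.org BreadcrumbList markup from (name, url) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail for a product page: Home > Shoes > Running.
trail = [
    ("Home", "https://www.example.com/"),
    ("Shoes", "https://www.example.com/shoes/"),
    ("Running", "https://www.example.com/shoes/running/"),
]
markup = breadcrumb_jsonld(trail)

# Wrap the JSON in the script tag that would be placed in the page's HTML.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(snippet)
```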

Logical URL Structure

A URL (Uniform Resource Locator) is the web address that users type into their browsers to access a specific page on your website. Your URL structure refers to the organization and format of these addresses.

A well-defined URL structure is clear, concise, and reflects the content of the page. URL optimization contributes significantly to both user experience and search engine visibility.

Other benefits of URL optimization:

- Enhanced Crawlability: Search engine crawlers use URLs to discover and index a site’s content. A logical URL structure makes it easier for crawlers to understand the hierarchy and context of your pages.

- Search Engine Ranking Boost: Search engines use URLs to understand the relevance of a page to a specific search query. Keyword-rich URLs can help your pages rank for relevant keywords, although the effect is generally small.

- Enhanced User Experience (UX): A good URL structure is easier to read and to remember. Clear and descriptive URLs help users understand what to expect on a page before they even click on it. This improves user experience and can reduce bounce rates.

Follow these principles to create a logical and effective URL Structure:

- Keep it Concise: Use short, descriptive URLs that are easy to read and understand.

- Use Keywords: Incorporate relevant keywords into your URLs, but avoid keyword stuffing.

- Use Hyphens (-) for Separation: Use hyphens to separate words in your URLs for better readability and crawlability.

- Avoid Special Characters: Avoid characters that need encoding, such as ampersands (&) and spaces, and use hyphens rather than underscores (_) as word separators.

- Avoid Dynamic Parameters: Minimize the use of dynamic parameters (e.g., ?id=123) in your URLs.

- Use Lowercase Letters: Use lowercase letters for all characters in your URLs.

- Maintain Consistency: Maintain a consistent URL structure throughout your website.

- Reflect Site Hierarchy: Use a URL structure that reflects the hierarchy of your website. For example: domainname.com/category/subcategory/page-name.
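Several of these principles (lowercase, hyphens, no special characters, hierarchy in the path) can be applied automatically when URLs are generated from titles. Here is one possible sketch; `slugify` and `build_url` are hypothetical helper names, and the titles are made up for the example.

```python
import re
import unicodedata


def slugify(title):
    """Turn a title into a lowercase, ASCII-only, hyphen-separated slug."""
    # Strip accents: "Café" becomes "Cafe".
    text = unicodedata.normalize("NFKD", title)
    text = text.encode("ascii", "ignore").decode("ascii").lower()
    # Any run of characters other than a-z and 0-9 becomes a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


def build_url(domain, *segments):
    """Join slugified segments so the path mirrors the site hierarchy."""
    path = "/".join(slugify(segment) for segment in segments)
    return f"https://{domain}/{path}"


print(slugify("Trail Running_Shoes & Socks (2024)"))
print(build_url("example.com", "Shoes", "Trail Running", "Ultra Grip 2"))
```

The first call yields `trail-running-shoes-socks-2024`, and the second yields `https://example.com/shoes/trail-running/ultra-grip-2`.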

XML Sitemaps

For improved crawlability, submit an XML sitemap to search engines. A sitemap is an XML file that lists the pages on your website that you want search engines to index.

It acts as a roadmap that helps crawlers discover your content efficiently, especially on large or complex websites. You can use tools like Sitemap Generators or, when using WordPress, plugins like Rank Math and Yoast SEO to help you generate Sitemaps.

Benefits of XML Sitemaps:

- Faster Indexing: XML sitemaps can help search engines index your content faster. This is particularly beneficial for new websites or websites with frequently updated content.

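- Prioritizing Content: The sitemap format lets you annotate each URL with optional values such as <lastmod> and <priority>. Google has stated that it ignores <priority> and <changefreq>, so treat them as hints for other search engines at best and focus on keeping <lastmod> accurate.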

- Discovering New Content: XML sitemaps help search engines discover new content, such as blog posts or product pages, as soon as they’re published.

- Helps with websites with poor internal linking: If a website has poor internal linking and some pages are orphaned, a sitemap will help search engines find these pages.

When submitting XML sitemaps, make sure they don’t include broken links, redirects, or non-canonical URLs. Each sitemap file is also limited to 50,000 URLs and 50 MB uncompressed. If you have more URLs, split them across multiple sitemaps and list those files in a sitemap index.
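As a rough sketch of how the 50,000-URL limit can be handled, the following script splits a URL list into as many sitemap documents as needed. The function name and the example.com URLs are hypothetical; a WordPress plugin or sitemap generator would normally do this for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit mentioned above


def build_sitemaps(urls, max_urls=MAX_URLS):
    """Return a list of sitemap XML strings, each with at most max_urls URLs."""
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = url  # special characters are escaped
        body = ET.tostring(urlset, encoding="unicode")
        documents.append('<?xml version="1.0" encoding="UTF-8"?>\n' + body)
    return documents


# Hypothetical canonical URLs; only pages you want indexed belong here.
urls = [
    "https://www.example.com/",
    "https://www.example.com/shoes/",
    "https://www.example.com/shoes/running/",
]
sitemaps = build_sitemaps(urls)
print(sitemaps[0])
```

Each string in `sitemaps` would be saved as its own file (for example `sitemap-1.xml`, a name chosen here only for illustration).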

Robots.txt

Use robots.txt to instruct search engine crawlers on which pages or sections to crawl and which to avoid.

Generally, you can find your website’s Robots.txt at https://www.domain.com/robots.txt

Robots.txt helps you:

- Control Crawl Budget: Search engines allocate a “crawl budget” to each website, limiting the number of pages they crawl. Robots.txt allows you to direct crawlers to your most important pages, ensuring they don’t waste time on irrelevant or low-value content.

- Keep Crawlers Away from Duplicate Content: You can use robots.txt to stop search engines from crawling duplicate or parameter-generated URLs. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so use a noindex meta robots tag or a canonical tag when a page must stay out of search results.

- Keep Crawlers Out of Low-Value Areas: Robots.txt can be used to keep crawlers away from areas such as admin pages or internal search results. It is a publicly readable request to well-behaved crawlers, not a security mechanism, so protect genuinely sensitive information with authentication.

- Reduce Server Load: By blocking crawlers from accessing unnecessary files, you can reduce the load that crawling places on your server.

Avoid accidentally blocking important pages or directories that you want search engines to index.
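One way to catch an accidental block is to test your important URLs against the rules before deploying them. The sketch below uses Python’s standard `urllib.robotparser`; the rules and URLs are hypothetical, and the standard-library parser may not match Google’s handling of every pattern (wildcards, for example), so treat the result as a first check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs: the first one is a page we want crawled.
urls = [
    "https://www.example.com/blog/technical-seo/",
    "https://www.example.com/search/?q=shoes",
    "https://www.example.com/wp-admin/options.php",
]
blocked = blocked_urls(ROBOTS_TXT, urls)
print(blocked)
```

If a page you want indexed shows up in `blocked`, the rules need adjusting.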

Additionally, broken links and redirect chains can hinder crawlability and waste crawl budget. Regularly check for and fix any broken links or redirect issues.

Indexability

Simply having crawlable content isn’t enough. Your website’s pages must also be indexable to appear in search engine results.

Indexability refers to a web page’s ability to be indexed by search engines. An index is the massive database that search engines use to provide search results. If a page isn’t indexable, it won’t appear in search results, no matter how well it’s optimized for other SEO factors.

Indexability ensures that search engines can understand, analyze, and store your content in their index, making it discoverable to users searching for relevant information.

Here’s why Indexability is a vital part of Technical SEO:

1. Visibility in Search Engine Results Pages (SERP)

Without indexability, your website’s pages won’t appear in search results, rendering your SEO efforts futile.

2. Accurate Representation of Content

Indexing allows search engines to understand the content and context of your pages, ensuring they’re displayed to users searching for relevant information.

3. Improved Search Engine Rankings

Search engines prioritize websites with high-quality, indexable content. By optimizing indexability, you can improve your website’s chances of ranking higher in search results.

4. Avoiding Duplicate Content Issues

Proper indexation helps search engines understand which version of a page is the canonical (original) version, preventing duplicate content issues.

To improve the indexability of your site, you can focus on optimizing the following elements:

Meta Robots Tags

Meta robots tags are snippets of HTML code placed within the <head> section of your web pages. They communicate with search engine crawlers, telling them whether to index a page, follow its links, or both.

Meta robots tags help you with:

- Controlling Indexation: They allow you to prevent sensitive or duplicate content from appearing in search results.

- Managing Crawl Budget: The nofollow directive asks search engines not to follow the links on a page, which can steer crawlers away from low-priority URLs. Search engines may treat it as a hint rather than a strict rule.

- Customizing Search Snippets: Directives such as nosnippet and max-snippet give you control over how your pages appear in search result snippets.

- Preventing Caching: The noarchive directive asks search engines not to keep a cached copy of a page.
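To see which directives a page actually sends, you can read the tag back out of the HTML. The following is a small sketch using Python’s built-in `html.parser`; the class and function names and the sample page are assumptions, and it only looks at the generic `robots` meta tag, not crawler-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


def is_indexable(html):
    """False when the page carries noindex (or none, which implies it)."""
    return not ({"noindex", "none"} & robots_directives(html))


# Hypothetical page that should stay out of the index but pass on its links.
page = '<head><meta name="robots" content="noindex, follow"></head>'
print(sorted(robots_directives(page)))
print(is_indexable(page))
```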

Canonical Tags

At times, search engines can struggle to determine which version of a page is the “original,” which spreads ranking signals across the duplicates. That’s where canonical tags come in. They are a crucial tool for managing duplicate content and ensuring your preferred version of a page is indexed.

A canonical tag (rel="canonical") is an HTML element that specifies the “canonical” or preferred version of a web page. It tells search engines which URL should be considered the primary version when multiple pages have similar or identical content.

Canonical Tags help you with:

- Preventing Duplicate Content Issues: Canonical tags tell search engines which of several versions of the same content to index, avoiding confusion and split ranking signals.

- Consolidating Ranking Signals: They consolidate ranking signals from duplicate pages to the canonical version, improving its visibility in search results.

- Improving Crawl Budget Efficiency: By indicating the canonical version, you guide search engine crawlers to focus on indexing the most important pages, optimizing crawl budget.

- Syndicated Content: If you syndicate your content, canonical tags can point back to the original source.

To implement a canonical tag, add a <link rel="canonical"> element within the <head> section of your HTML and use its href attribute to specify the URL of the canonical version, for example <link rel="canonical" href="https://www.domain.com/page-name/">.

Things to avoid while implementing Canonical Tags:

- Using Relative URLs: Always use absolute URLs to avoid errors.

- Using Multiple Canonical Tags: Each page should have only one canonical tag.

- Blocking the Canonical URL: Ensure the canonical URL is not blocked by robots.txt or meta robots tags.

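- Pointing Every Paginated Page at Page One: Give each page in a paginated series its own self-referencing canonical tag instead. The rel="next" and rel="prev" attributes do no harm, but Google announced in 2019 that it no longer uses them as an indexing signal.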
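Two of the mistakes listed above, relative URLs and multiple canonical tags, are easy to detect automatically. The sketch below is one hypothetical way to audit a page’s HTML with Python’s standard library; the class and function names and the sample markup are illustrative only.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonicals.append(attrs.get("href") or "")


def canonical_problems(html):
    """Return a list of human-readable problems; empty means none found."""
    parser = CanonicalParser()
    parser.feed(html)
    problems = []
    if not parser.canonicals:
        problems.append("no canonical tag")
    if len(parser.canonicals) > 1:
        problems.append("multiple canonical tags")
    for href in parser.canonicals:
        parts = urlparse(href)
        if not (parts.scheme and parts.netloc):  # absolute URLs have both
            problems.append(f"relative canonical URL: {href}")
    return problems


# Hypothetical pages: one correct, one with two tags (one of them relative).
good = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
bad = (
    '<head><link rel="canonical" href="https://www.example.com/shoes/">'
    '<link rel="canonical" href="/shoes/"></head>'
)
print(canonical_problems(good))
print(canonical_problems(bad))
```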

Find and Fix Indexing Issues

Use Google Search Console to monitor indexing errors and identify any issues that may be affecting indexability.

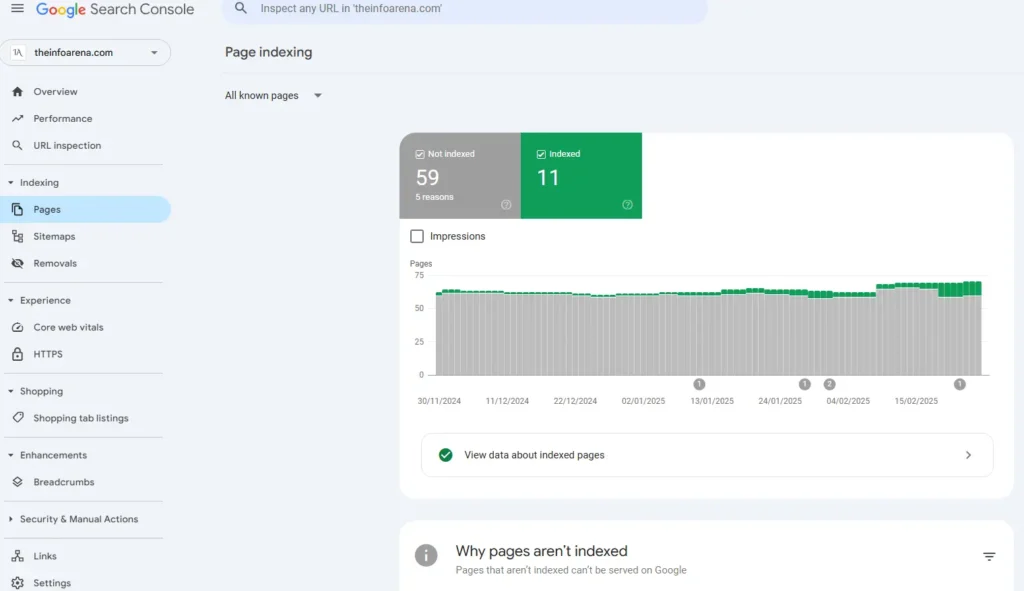

The Page Indexing section of the Google Search Console provides crucial insights into the status of your website’s indexability, helping you identify and fix issues that could be hindering your SEO performance.

The report provides a clear overview of:

- The number of pages Google has successfully indexed.

- The number of pages Google has attempted to index but couldn’t, along with the specific reasons why each group of pages wasn’t indexed.

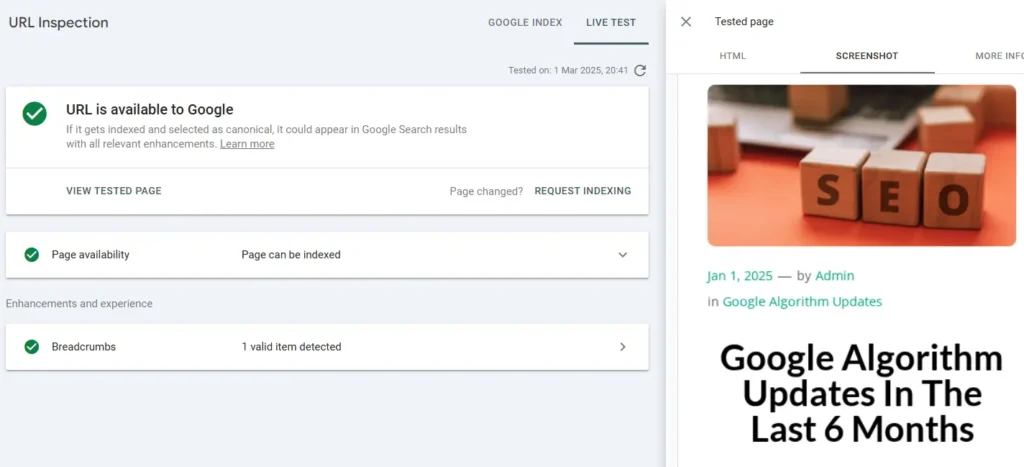

The URL Inspection Tool of the Google Search Console is particularly useful. It provides granular, page-level data on how Google sees your URLs, making it an essential tool for troubleshooting indexing issues and understanding your website’s performance.

This tool lets you:

- Check Indexing Status: See if a specific URL is indexed by Google.

- Request Indexing: If a page isn’t indexed, you can request Google to crawl and index it.

- View Crawl Information: Get details about the last time Google crawled the URL, including the crawl date and time.

- Identify Indexing Issues: Discover why a page might not be indexed, such as:

  - Robots.txt blocking

  - Canonicalization problems

  - Page errors

  - Mobile usability issues

- View Canonical URLs: See which URL Google considers the canonical version of a page.

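- Mobile Usability: Earlier versions of the tool reported whether the inspected URL was mobile-friendly. Google retired its Mobile Usability report in December 2023, so use Chrome Lighthouse to check this instead.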

- Enhancements: See information about structured data.

Focus on fixing the most critical issues first, such as server errors or blocked pages. After making changes, use the “Validate Fix” option in Search Console to ask Google to recrawl and reindex your pages.

Other tools you can use to find and fix indexing issues are:

- Screaming Frog SEO Spider: This desktop crawler analyzes your website’s structure, identifying broken links, duplicate content, and other technical SEO issues that can affect indexing. It is excellent for auditing large websites and shows you the HTTP status codes that your site’s pages are returning.

- Ahrefs Webmaster Tools/Site Audit: This tool crawls your website and provides a comprehensive report of technical SEO issues, including indexing problems and information about orphaned pages.

- Semrush Site Audit: Semrush’s Site Audit tool crawls your website and provides a detailed report of technical SEO issues, including indexing errors, crawlability problems, and duplicate content. It provides actionable recommendations for fixing identified issues.

Duplicate Content

Duplicate content is a common but often overlooked issue that can significantly hinder your website’s performance.

Duplicate content refers to blocks of content within or across domains that either completely match other content or are appreciably similar. Search engines struggle to determine which version is the “original,” leading to various SEO problems.

Duplicate Content can lead to the following issues:

- Diluted Ranking Signals: Search engines don’t know which version to rank, so ranking signals get split between the duplicate pages.

- Wasted Crawl Budget: Search engines waste resources crawling multiple versions of the same content instead of discovering new, valuable pages.

- Indexing Issues: Search engines might choose to index only one version, or none at all, potentially hiding important content from search results.

- User Experience: Duplicate content can confuse users, leading to a poor browsing experience.

Here’s how you can fix duplicate content issues:

- 301 Redirects: Redirect duplicate URLs to the preferred (canonical) version.

- Canonical Tags: Use the rel="canonical" tag to tell search engines which URL is the original.

- Consistent Internal Linking: Ensure internal links point to the canonical versions of your pages.

- Avoid Syndicating Exact Duplicate Content: If you must syndicate content, make sure to use canonical tags pointing back to the original source.

WWW vs. Non-WWW: Choosing Your Preferred Website Version

Search engines treat www.domain.com and domain.com as two distinct websites. If both versions are accessible, it can lead to duplicate content issues, diluted ranking signals, and wasted crawl budget.

Therefore, it’s essential to choose one version as your preferred (canonical) version.

Historically, the WWW version was more common. However, in modern SEO, there’s no inherent advantage to either version. The most important thing is to be consistent and ensure that all internal and external links point to your preferred version.

If you decide to use the non-WWW version, you’ll need to implement 301 redirects to send users and search engines from the WWW version to the non-WWW version. If you prefer the WWW version, redirect in the opposite direction.

This can be done by editing the .htaccess file, adding rules to your server configuration file, using your hosting provider’s control panel, or using a WordPress plugin.
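Whichever method you use, the redirect logic itself is simple. The sketch below expresses it as a plain Python function so it can be tested independently of any server; the function names are hypothetical, and it additionally assumes that the preferred version is served over HTTPS and on the default port.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url, use_www=False):
    """Rewrite url to the preferred host (and HTTPS, by assumption)."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # hostname is lowercased and excludes any port
    bare = host[4:] if host.startswith("www.") else host
    host = f"www.{bare}" if use_www else bare
    return urlunsplit(("https", host, parts.path or "/", parts.query, parts.fragment))


def redirect_for(url, use_www=False):
    """Return (301, target) when url is not the preferred version, else None."""
    target = preferred_url(url, use_www)
    return None if target == url else (301, target)


print(redirect_for("http://www.example.com/blog/?page=2"))
print(redirect_for("https://example.com/blog/"))
```

The first call returns a 301 pointing at `https://example.com/blog/?page=2`; the second returns `None` because the URL is already the preferred version.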

PageSpeed

PageSpeed, the measure of how quickly your web pages load, is a critical element of user experience and a confirmed ranking signal for both desktop and mobile searches.

Google uses the mobile version of a site’s content, crawled with the smartphone agent, for indexing and ranking. This is called mobile-first indexing, so optimizing for mobile devices is strongly recommended.

Optimizing PageSpeed impacts crawl budget. Faster loading times allow search engine crawlers to crawl more pages within their allocated crawl budget. This ensures that all your important content is discovered and indexed.

Optimizing PageSpeed leads to higher conversion rates. On faster websites, users are more likely to complete a purchase or sign up for a service, as they don’t have to wait for pages to load.

Google’s core ranking systems look to reward content that provides a good page experience. Google recommends that site owners seeking to be successful with their ranking systems should not focus on only one or two aspects of page experience.

Instead, check if you’re providing an overall great page experience across many aspects. To self-assess your content’s page experience, Google recommends answering the following questions:

- Do your pages have good Core Web Vitals?

- Are your pages served in a secure fashion?

- Does your content display well on mobile devices?

- Does your content avoid using an excessive amount of ads that distract from or interfere with the main content?

- Do your pages avoid using intrusive interstitials?

- Is your page designed so visitors can easily distinguish the main content from other content on your page?

Here are some resources that can help you measure, monitor, and optimize your page experience:

- Understanding Core Web Vitals and Google Search results: Learn more about Core Web Vitals and how they work in Google Search results.

- Search Console’s HTTPS report: Check if you’re serving secure HTTPS pages and what to fix if you’re not.

- Check if a site’s connection is secure: Learn how to check if your site’s connection is secure, as reported by Chrome. If the page isn’t served over HTTPS, learn how to secure your site with HTTPS.

- Avoid intrusive interstitials and dialog: Learn how to avoid interstitials that can make content less accessible.

- Chrome Lighthouse: This toolset from Chrome can help you identify a range of improvements to make related to page experience, including mobile usability.

Core Web Vitals is a set of metrics that measure real-world user experience for loading performance, interactivity, and visual stability of the page. Core Web Vitals metrics:

- Largest Contentful Paint (LCP): Measures loading performance. To provide a good user experience, strive to have LCP occur within the first 2.5 seconds of the page starting to load.

- Interaction to Next Paint (INP): Measures responsiveness. To provide a good user experience, strive to have an INP of less than 200 milliseconds.

- Cumulative Layout Shift (CLS): Measures visual stability. To provide a good user experience, strive to have a CLS score of less than 0.1.
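The three thresholds above can be turned into a quick pass/fail check. This is a minimal sketch: the metric keys and the function name are made up for the example, the sample measurements are invented, and values exactly at a threshold are treated as passing, which is an assumption.

```python
# "Good" thresholds as quoted above: LCP in seconds, INP in milliseconds, CLS unitless.
THRESHOLDS = {"lcp_seconds": 2.5, "inp_ms": 200, "cls": 0.1}


def assess_core_web_vitals(metrics):
    """Map each metric name to True when it is within its 'good' threshold."""
    return {name: metrics[name] <= limit for name, limit in THRESHOLDS.items()}


# Hypothetical field measurements for one page.
measured = {"lcp_seconds": 2.1, "inp_ms": 340, "cls": 0.05}
result = assess_core_web_vitals(measured)
print(result)
```

For these invented numbers, loading and visual stability pass while responsiveness does not, which would point toward JavaScript and main-thread work as the first area to investigate.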

Tools to Measure and Improve PageSpeed:

- Google PageSpeed Insights: Provides detailed reports on your website’s speed and offers specific recommendations for improvement.

- GTmetrix: Another popular tool that provides an in-depth analysis of website speed and performance.

Here’s how you can improve PageSpeed:

- Optimize Images: Compress and resize images to reduce file sizes. Image formats like WebP, which often provide better compression than PNG or JPEG, mean faster downloads and less data consumption.

- Minify and Combine Files: Minify JavaScript and CSS files to remove unnecessary characters and combine multiple files into fewer requests.

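- Enable Browser Caching: Leverage browser caching to store static assets such as images, CSS, and JavaScript, so returning visitors don’t have to download them again.

- Use a CDN: Implement a content delivery network (CDN) to distribute your content across multiple servers, so each visitor is served from a location close to them.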

- Choose a Fast Web Host: Select a reliable web hosting provider with fast servers.

- Lazy Loading: Implement lazy loading for images and videos to load them only when they’re visible in the viewport.

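- Reduce HTTP Requests: Minimize the number of HTTP requests by removing unused scripts, styles, and third-party embeds, and by using CSS sprites for small icons. Combining files matters less on servers that support HTTP/2, which can carry many requests over a single connection.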

HTTPS

Use HTTPS (Hypertext Transfer Protocol Secure) to encrypt communication between your website and users’ browsers. HTTPS is the secure version of HTTP, the protocol used to send data between a web browser and a website.

HTTPS encrypts the communication, preventing unauthorized access and ensuring data integrity. HTTPS signals to users that your website is secure and trustworthy.

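HTTPS also preserves referral data. Browsers generally drop the referrer when a visitor moves from an HTTPS page to an HTTP page, so on an HTTP site that traffic can be misreported as direct. With HTTPS, you can track where your website traffic is coming from.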

Moreover, Google has been using HTTPS as a ranking signal since 2014. If you are running a website on an HTTP domain, we highly recommend that you migrate to HTTPS.

Here’s how you can implement HTTPS:

- Obtain an SSL Certificate: You can purchase an SSL certificate from a reputable certificate authority (CA). Many web hosting providers offer free SSL certificates.

- Install the SSL Certificate: Follow your hosting provider’s instructions to install the SSL certificate on your server.

- Update Internal Links: Change all internal links on your website from HTTP to HTTPS.

- Implement 301 Redirects: Set up 301 redirects to automatically redirect users from HTTP to HTTPS versions of your pages.

- Update External Links (Where Possible): Where you have control, update external links to your site to the HTTPS version.

- Update Sitemap and Google Search Console: Update your XML sitemap to reflect the HTTPS URLs. Add the HTTPS version of your website to Google Search Console.

- Check for Mixed Content: Use tools like Screaming Frog or your browser’s developer tools to check your website for mixed content (HTTP resources on an HTTPS page) and update them to HTTPS.
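The mixed content check in the last step can be approximated with a short script. The sketch below scans a page’s HTML for resources loaded over plain HTTP; the class and function names and the sample markup are hypothetical, and it covers only a few common tags, so a crawler or the browser’s developer tools remain the more thorough option.

```python
from html.parser import HTMLParser


class MixedContentParser(HTMLParser):
    """Collects (tag, url) pairs for resources loaded over plain HTTP."""

    SRC_TAGS = {"img", "script", "iframe", "video", "audio", "source"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link":
            # Only stylesheets are fetched as page resources here.
            if "stylesheet" not in (attrs.get("rel") or "").lower().split():
                return
            url = attrs.get("href")
        elif tag in self.SRC_TAGS:
            url = attrs.get("src")
        else:
            return  # ordinary <a href> links are navigation, not mixed content
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html):
    parser = MixedContentParser()
    parser.feed(html)
    return parser.insecure


# Hypothetical HTTPS page that still references two HTTP resources.
page = """
<head><link rel="stylesheet" href="http://cdn.example.com/style.css"></head>
<body>
  <img src="http://www.example.com/logo.png">
  <script src="https://www.example.com/app.js"></script>
  <a href="http://partner.example.org/">Partner</a>
</body>
"""
print(find_mixed_content(page))
```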

Pagination vs. Infinite Scrolling

When it comes to displaying large sets of content, website owners often face the choice between pagination and infinite scrolling. Both methods have their pros and cons, especially from a Technical SEO perspective.

Pagination

Pagination divides content into distinct pages, typically with numbered links at the bottom.

SEO Advantages:

- Clear URL structure: Each page has a unique URL, making it easier for search engines to crawl and index.

- Control over content: You can optimize each paginated page for specific keywords.

- Easier navigation for users: Users can easily navigate to specific pages.

SEO Disadvantages:

- Can lead to many shallow pages, which can be seen as thin content.

- Requires more clicks to access deeper content.

Infinite Scrolling

Infinite scrolling loads content continuously as the user scrolls down the page.

SEO Advantages:

- Enhanced user engagement: Users can browse content seamlessly without clicking to new pages.

- Better for mobile experience: Infinite scrolling is often preferred on mobile devices.

SEO Disadvantages:

- Crawlability issues: Search engines may struggle to crawl and index all the content loaded via infinite scrolling.

- URL fragmentation: It can be difficult to create unique URLs for each section of content.

- JavaScript dependency: Relying on JavaScript to load content can cause indexing issues.

- Footer issues: It can be very difficult for a user to reach a website’s footer.

Ultimately, you need to consider your target audience and the type of content you’re displaying.

- Infinite scrolling is often preferred for visual content or social media feeds.

- Pagination is preferred for ecommerce category pages.

While infinite scrolling can enhance user engagement, pagination is generally more SEO-friendly. If you choose infinite scrolling, careful implementation is crucial to avoid crawlability and indexability issues.
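A common way to implement infinite scrolling carefully is to make every chunk of content reachable at its own paginated URL as well, so crawlers that don’t scroll can still follow plain links. The sketch below only illustrates how such component URLs might be generated; the function name and the `/page/N/` pattern are assumptions, not a required convention.

```python
import math


def paginated_urls(base_url, total_items, page_size):
    """One crawlable URL per chunk; the first page keeps the base URL."""
    pages = math.ceil(total_items / page_size)
    return [
        base_url if n == 1 else f"{base_url}page/{n}/"
        for n in range(1, pages + 1)
    ]


# Hypothetical category with 45 products, loaded 20 at a time.
component_urls = paginated_urls("https://www.example.com/shoes/", 45, 20)
print(component_urls)
```

Each of these URLs would serve the same items the scroll loads at that point, and each would carry its own self-referencing canonical tag.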

Structured Data

Google defines Structured Data as a standardized format for providing information about a page and classifying the page content. Structured data helps search engines understand the context and meaning of your content, leading to richer search results and improved visibility.

Benefits of Implementing Structured Data:

- Rich Snippets: Structured data enables search engines to display rich snippets, such as star ratings, product prices, and event details, in search results. These rich snippets can increase click-through rates and drive more traffic to your website.

- Improved Understanding of Content: Structured data helps search engines understand the context and meaning of your content, ensuring that it’s displayed to users searching for relevant information.

- Voice Search Optimization: Structured data can help improve your website’s visibility in voice search results, as it provides clear and concise information that voice assistants can easily understand.

- Knowledge Graph Enhancement: Structured data can help your website’s information appear in Google’s Knowledge Graph, increasing your brand’s visibility and authority.

Here’s how you can Implement Structured Data:

- Identify Relevant Schema: Determine the appropriate schema for your content from Schema.org.

- Create JSON-LD Markup: You can use a structured data markup generator. If you use WordPress, plugins like Yoast SEO can help you with structured data implementation. Alternatively, you can create the JSON-LD code manually.

- Add the Markup to Your Page: Place the JSON-LD code inside a <script type="application/ld+json"> tag in the <head> or <body> section of your HTML.

- Test Your Markup: Use Google’s Rich Results Test or Schema Markup Validator to ensure that your markup is correctly implemented.

- Monitor Your Results: Use Google Search Console’s Rich Results report to monitor your structured data performance.
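
If you'd rather create the JSON-LD manually, the steps above can be sketched in a few lines of Python. This is only an illustration: the `json_ld_script` helper is a made-up name, and the author and date values are placeholders you would replace with your own.

```python
import json


def json_ld_script(data):
    """Wrap a schema.org dictionary in a JSON-LD <script> tag."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a stray "</script>" in the data cannot close the tag early.
    # "<\/" is still valid JSON and parses back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Article schema from Schema.org; author and date are hypothetical placeholders.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Technical SEO: Everything You Need to Know",
    "author": {"@type": "Person", "name": "Jane Doe"},
    "datePublished": "2025-01-15",
}

print(json_ld_script(article))
```

Paste the printed tag into your page template, then run the page through the Rich Results Test to confirm it parses as expected.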

Broken Links

Broken links, also known as dead links, are hyperlinks on your website that no longer point to a valid destination. When a user clicks one, they land on an error page (typically a 404) instead of the intended content.

Here’s how Broken Links affect Technical SEO:

- Crawlability and Indexability: Broken links act as roadblocks, preventing crawlers from reaching valuable pages. This can lead to incomplete indexing and reduced visibility in search results.

- Wasted Crawl Budget: Search engines allocate a limited “crawl budget” to each website. When crawlers encounter broken links, they waste time and resources trying to access non-existent pages. This diverts crawl budget away from important working pages.

- Site Architecture and Navigation: A well-structured website provides a clear and logical navigation path for users and crawlers. Broken links disrupt this path, creating dead ends and hindering the flow of your website.

- HTTP Status Codes: Broken links typically result in 404 (Not Found) errors, which are HTTP status codes. An excessive number of 404 errors can signal to search engines that your website is poorly maintained.

Here’s how you can find and fix Broken Links:

- Google Search Console: The “Pages” report under Indexing (formerly the “Coverage” report) lists URLs that Google could not index, including those that returned a 404 (Not Found) error. Regularly monitor this report to identify and address broken links.

- Screaming Frog SEO Spider: This powerful desktop crawler can scan your entire website and identify broken links. It provides detailed reports on 404 errors and other HTTP status codes.

- Ahrefs and SEMrush Site Audits: These popular SEO tools include site audit features that detect broken links and provide actionable insights.

Once you’ve found the broken links, fix them in one of these ways:

- 301 Redirects: If a page has moved, use a 301 redirect to send users and crawlers to the new URL.

- Replace with Working Links: If possible, replace the broken link with a link to a relevant, working page.

- Remove the Link: If there’s no suitable replacement, remove the broken link entirely.
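
To give you a feel for what these crawlers do under the hood, here's a rough Python sketch that pulls the links out of a page and flags any that return an error status. The `find_broken_links` function and the `get_status` callback are assumptions made for this example. On a real site, `get_status` would send an HTTP request to each URL; here it reads from a small dictionary of made-up statuses so the example runs offline.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(page_url, html, get_status):
    """Return (url, status) pairs for links whose status is 400 or higher."""
    parser = LinkExtractor()
    parser.feed(html)
    broken = []
    for href in parser.links:
        # Skip in-page anchors and non-HTTP links.
        if href.startswith(("#", "mailto:", "tel:", "javascript:")):
            continue
        target = urljoin(page_url, href)  # resolve relative links
        status = get_status(target)
        if status >= 400:
            broken.append((target, status))
    return broken


# Made-up statuses standing in for real HTTP responses.
fake_statuses = {
    "https://example.com/pricing": 200,
    "https://example.com/old-page": 404,
}
html = '<a href="/pricing">Pricing</a> <a href="/old-page">Old</a> <a href="#top">Top</a>'

broken = find_broken_links(
    "https://example.com/", html, lambda url: fake_statuses.get(url, 200)
)
print(broken)  # [('https://example.com/old-page', 404)]
```

Each URL in the result is a candidate for one of the three fixes above: redirect it, replace it, or remove it.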

Implementing Hreflang: Targeting Global Audiences

Hreflang is an HTML attribute that tells search engines which language and regional variations of a page to display to users. It helps search engines understand the relationship between different language versions of your content and prevents them from treating them as duplicate content.

Here’s how implementing Hreflang impacts your Technical SEO:

- Targeting Global Audiences: Hreflang ensures that users see the most relevant version of your website based on their language and location. This improves user experience and increases engagement.

- Preventing Duplicate Content Issues: When you have multiple language versions of the same content, search engines may see them as duplicates. Hreflang tags help search engines understand that these are intended variations, not duplicates.

- Improving Search Visibility: Hreflang doesn’t boost rankings on its own, but by helping search engines serve the correct language version, it can improve your website’s relevance and visibility in search results for specific regions and languages.

- Managing International SEO: For websites with a strong international presence, hreflang is essential for managing and optimizing content for different markets.

Here’s how you can implement Hreflang:

- Determine Language and Region Codes: Use ISO 639-1 language codes (e.g., “en” for English, “es” for Spanish) and ISO 3166-1 alpha-2 country codes (e.g., “US” for United States, “CA” for Canada), and combine them for language-region targeting (e.g., “en-GB” for English in the United Kingdom).

- Choose an Implementation Method:

- HTML Link Tags: Add <link> elements with rel="alternate" and hreflang attributes to the <head> section of your HTML.

- HTTP Headers: Use the Link HTTP header to specify hreflang values. This is useful for non-HTML files such as PDFs.

- XML Sitemap: Include hreflang information in your XML sitemap.

- Use Absolute URLs: Always use absolute URLs in your hreflang tags.

- Implement Return Tags: Ensure that each language version links back to all other language versions, including itself.

- Use the “x-default” Tag: Use the hreflang="x-default" tag to specify a fallback page for users whose language or region is not specifically targeted.

- Validate Your Implementation: Use an hreflang checker or a site audit tool to validate your hreflang implementation. Note that Google Search Console’s International Targeting report, once the go-to option here, has been retired.
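
Here's a short Python sketch of the HTML link tag method that follows the rules above: absolute URLs only, the same full set of tags on every version (which takes care of return tags and the self-reference), and an optional x-default. The `hreflang_tags` helper and the example.com URLs are hypothetical and only meant to show the pattern.

```python
from urllib.parse import urlparse


def hreflang_tags(alternates, x_default=None):
    """Build the <link> tags that every language version should carry.

    alternates maps an hreflang code (e.g. "en-gb") to an absolute URL.
    """
    entries = list(alternates.items())
    if x_default:
        entries.append(("x-default", x_default))

    tags = []
    for code, url in entries:
        parsed = urlparse(url)
        # Reject relative URLs: hreflang needs scheme and host.
        if not (parsed.scheme and parsed.netloc):
            raise ValueError(f"hreflang URL must be absolute: {url!r}")
        tags.append(f'<link rel="alternate" hreflang="{code}" href="{url}" />')
    return tags


# Hypothetical site with three language versions and a fallback page.
versions = {
    "en-us": "https://example.com/us/",
    "en-gb": "https://example.com/uk/",
    "es": "https://example.com/es/",
}
for tag in hreflang_tags(versions, x_default="https://example.com/"):
    print(tag)
```

Because the identical block of tags goes into the <head> of every version, each page automatically links back to all the others and to itself.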

Conclusion

I want to conclude by thanking you for reading the post. By prioritizing Technical SEO, you’re investing in the long-term health and visibility of your website.

A well-optimized technical foundation empowers your content to reach its full potential, driving organic traffic, enhancing user experience, and ultimately achieving your business goals.

The important thing to remember is that Technical SEO is not a one-time fix but an ongoing process. Regular audits, monitoring, and adjustments are essential to maintaining optimal performance.

Don’t be intimidated by the technical aspects. Start with the basics, gradually implement improvements, and leverage the wealth of tools and resources available.

To learn about the various elements of On-Page SEO and how to optimize them for users and search engines, read our comprehensive guide on On-Page SEO.

We’re here to support you on your SEO journey – feel free to reach out with any questions!